2025 AI Hardware Trends Reshape Custom Development

Most companies are approaching 2025 AI hardware trends backwards. They chase bigger GPUs and faster chips. They think raw compute is the only thing that matters. The result? Budgets burn fast. Little to show for it.

We see this story play out again and again. Flashy hardware upgrades. Stagnant results.

This year taught us something unexpected. The companies that got real ROI from AI weren't the ones with the biggest server farms. They were the ones who asked a different question: "What if we made our existing systems smarter at working together?"

That shift, from raw power to intelligent coordination, is what separates AI investments that pay off from those that become expensive regrets. The difference comes down to something most teams overlook: agentic protocols.

Why does this matter? The real value in 2025 isn't brute force. It's about smarter collaboration between agents. Better protocol design. Slashing wasted energy.

What Are Agentic Protocols, Really?

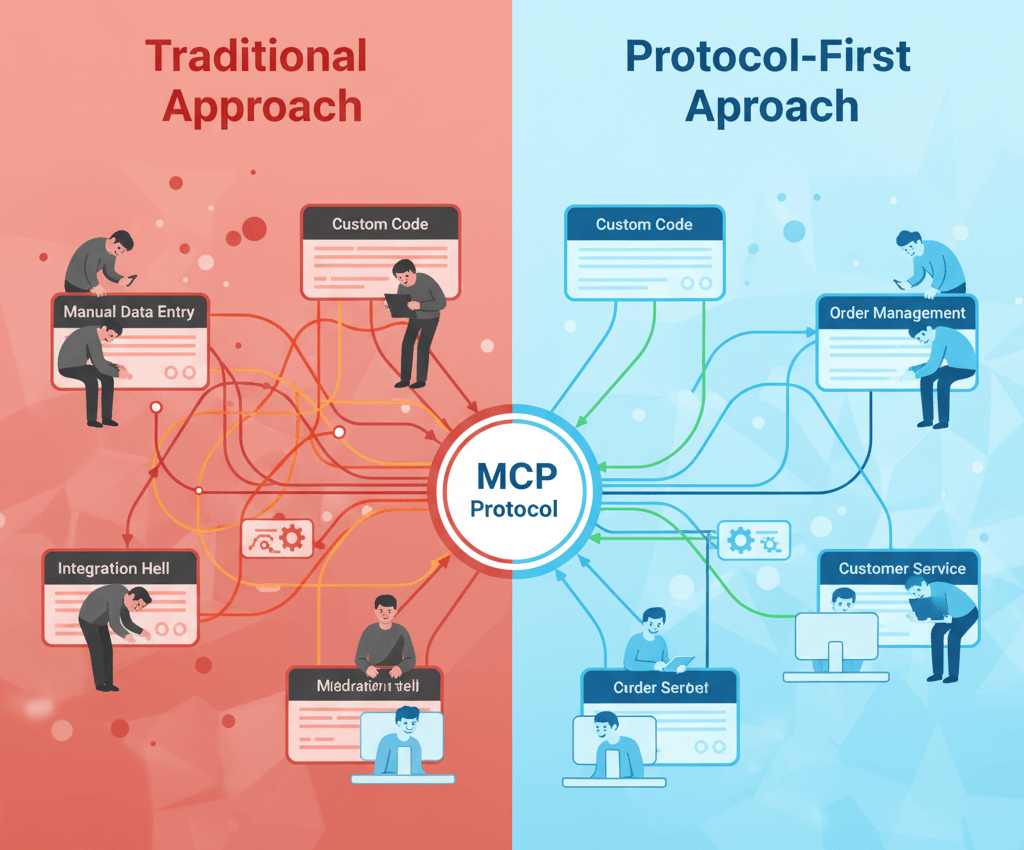

Think of traditional AI integration like hiring specialists who only speak different languages. Your CRM agent speaks one language. Your inventory system speaks another. Every interaction needs a translator (usually a developer writing custom code).

Agentic protocols like MCP (Model Context Protocol) and A2A (Agent2Agent) are more like establishing a common business language. They create standardized ways for AI agents to:

- Discover what other agents can do

- Negotiate who handles which tasks

- Share context without data duplication

- Escalate decisions when needed
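To make those four behaviors concrete, here is a minimal sketch in plain Python. It is not the MCP or A2A SDK. `Agent`, `Registry`, and the capability names are hypothetical stand-ins, and the "negotiation" is deliberately naive (the first agent that offers a capability wins).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A handler receives the shared context and returns a result dict.
Handler = Callable[[dict], dict]


@dataclass
class Agent:
    """Hypothetical agent: a name plus the capabilities it advertises."""
    name: str
    capabilities: Dict[str, Handler] = field(default_factory=dict)


class Registry:
    """Hypothetical in-process stand-in for protocol-level discovery."""

    def __init__(self) -> None:
        self._agents: List[Agent] = []

    def register(self, agent: Agent) -> None:
        self._agents.append(agent)

    def discover(self, capability: str) -> List[Agent]:
        # Discovery: which registered agents advertise this capability?
        return [a for a in self._agents if capability in a.capabilities]

    def dispatch(self, capability: str, context: dict) -> dict:
        providers = self.discover(capability)
        if not providers:
            # Escalation: nobody can handle it, so hand off to a human.
            return {"status": "escalated", "reason": f"no agent offers {capability}"}
        chosen = providers[0]  # Naive negotiation: first provider wins.
        # Shared context: the same dict is passed along, not copied.
        result = chosen.capabilities[capability](context)
        return {"status": "handled", "by": chosen.name, "result": result}


registry = Registry()
registry.register(
    Agent("orders", {"lookup_order": lambda ctx: {"order_id": ctx["order_id"], "found": True}})
)

shared_context = {"order_id": "A1"}
print(registry.dispatch("lookup_order", shared_context))
print(registry.dispatch("issue_refund", shared_context))
```

A real protocol does this across processes and vendors, with authentication and schemas. The point of the sketch is only that the caller never needs to know which agent answers.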

Real-world example: A client's customer service team was drowning. Their chatbot could handle basic questions. But the moment someone asked about a refund? The bot would collect information, create a ticket, then stop. A human had to log into three different systems. Look up the order. Calculate the refund. Route it for approval.

Four hours. Minimum. Per refund request.

After implementing MCP standards, the chatbot agent could directly query their order management agent. Verify purchase details. Calculate refund amounts. Route approvals. All without custom API work. No human in the loop unless something unusual came up.

Resolution time dropped from 4 hours to 8 minutes.

The protocol didn't make individual components smarter. It made the system smarter by letting components work together autonomously.
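The refund flow above might look something like this in outline. This is a sketch under assumptions, not the client's implementation: the order data, the `AUTO_APPROVE_LIMIT` threshold, and every function name are invented for illustration, and the order agent is a local function rather than a real protocol call.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: refunds above this amount go to a human.
AUTO_APPROVE_LIMIT = 100.00


@dataclass
class Order:
    order_id: str
    amount_paid: float


# Made-up data standing in for the order management system.
ORDERS = {
    "A100": Order("A100", 59.90),
    "A200": Order("A200", 480.00),
}


def order_agent_lookup(order_id: str) -> Optional[Order]:
    """Stand-in for the chatbot agent querying the order management agent."""
    return ORDERS.get(order_id)


def handle_refund_request(order_id: str) -> dict:
    # Step 1: verify purchase details.
    order = order_agent_lookup(order_id)
    if order is None:
        return {"order_id": order_id, "action": "escalate", "reason": "order not found"}

    # Step 2: calculate the refund (full amount in this sketch).
    refund = round(order.amount_paid, 2)

    # Step 3: route for approval; a human steps in only for unusual cases.
    if refund > AUTO_APPROVE_LIMIT:
        return {"order_id": order_id, "action": "escalate",
                "reason": "above auto-approve limit", "refund": refund}
    return {"order_id": order_id, "action": "approve", "refund": refund}


for oid in ("A100", "A200", "A999"):
    print(handle_refund_request(oid))
```

The routine case runs end to end without a person. The two escalation branches are where the "unless something unusual came up" rule lives.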

Here's the shift that defined 2025 - AI value moved from "can we run this model?" to "can our agents solve this without us?" The companies winning this year invested in systems that get smarter at working together, not just systems that run faster in isolation.

The Hardware Revolution No One Saw Coming

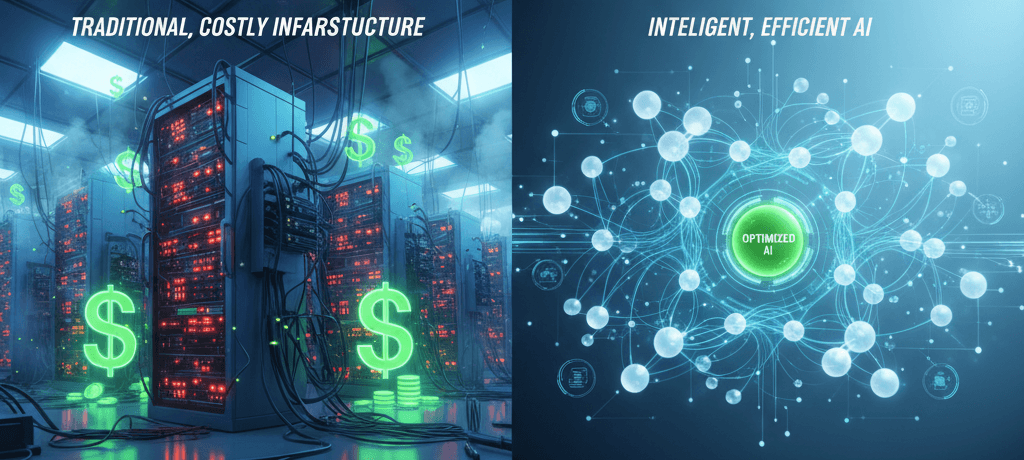

Hardware costs are dropping fast. Energy efficiency is climbing even faster. Stanford's 2025 AI Index Report found that hardware costs fell by 30% annually, while energy efficiency improved by 40% each year.

But here's what caught everyone off guard.

The cost of sophisticated AI inference dropped more than 280-fold between late 2022 and 2024. Think about that for a second. 280 times cheaper in two years. Performance that took a 540-billion-parameter model in 2022 is now available from a 3.8-billion-parameter model, a 142-fold reduction. Edge AI hardware now handles workloads that once required cloud infrastructure, cutting costs by up to 77%.
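A quick sanity check on those figures, as a few lines of Python. The per-year factor is our own back-of-envelope reading: it assumes the 280x drop was spread evenly over exactly two years, which the source numbers don't actually claim.

```python
# Figures quoted above.
params_2022 = 540e9   # parameters needed in 2022
params_2024 = 3.8e9   # parameters needed now
inference_cost_drop = 280.0  # total fold reduction in inference cost

# Parameter reduction: 540 / 3.8, which rounds to 142.
fold_reduction = params_2022 / params_2024
print(f"Parameter reduction: {fold_reduction:.1f}x")

# Assumption: the drop compounds evenly over two years, so the
# yearly factor is the square root of the total.
implied_yearly_factor = inference_cost_drop ** 0.5
print(f"Implied yearly cost factor: {implied_yearly_factor:.1f}x")
```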

We saw this firsthand last spring with a regional logistics client. They came to us frustrated. Their route optimization was stuck in Excel hell. Manual updates. Hours of work. Constant errors.

The conventional approach would've been: migrate to AWS, spin up GPU clusters, subscribe to enterprise AI services. Budget: $150K+ for year one.

We took a different path. Open-source LLMs running locally. Basic protocol orchestration.

That's the shift. You no longer need Silicon Valley money to build next-gen automation. The democratization of AI isn't coming. It already happened. Most companies just haven't noticed yet.

When Protocols Matter More Than Processing Power

Conventional wisdom says protocol stacks don't matter. It's all about which model you pick. We think that's wrong. Here's what most miss.

Protocols like MCP, A2A, and ACP are the new rails for building scalable agentic AI systems. They help you build at speed and scale.

MCP standardizes how agents connect to tools and data sources. A2A lets autonomous agents discover each other and hand off tasks directly. No human bottleneck required.

Builder reality: Last year, our n8n workflows hit scaling limits around 12-15 interconnected agents. Each new integration meant writing custom code to handle data mapping, authentication, and error states. We were spending 15-20 hours monthly just maintaining connections between agents that should have been working together automatically. Once we adopted basic MCP standards, everything changed. New agents could automatically discover existing ones. Orchestrating distributed automations became routine. As easy as setting up an email trigger or webhook.
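One way to see why the 12-15 agent range hurts: count the connections. The sketch below assumes the worst case, where every agent talks to every other agent through its own custom integration, and compares that with one adapter per agent under a shared protocol. Both functions are illustrative, and real topologies are usually sparser than the worst case.

```python
def point_to_point_links(n_agents: int) -> int:
    """Custom integrations needed if every pair of agents is wired directly."""
    return n_agents * (n_agents - 1) // 2


def shared_protocol_adapters(n_agents: int) -> int:
    """With a common protocol, each agent needs one adapter to the shared standard."""
    return n_agents


for n in (5, 12, 15):
    print(
        f"{n} agents: {point_to_point_links(n)} pairwise links "
        f"vs {shared_protocol_adapters(n)} protocol adapters"
    )
```

Pairwise wiring grows with the square of the agent count. A shared protocol grows linearly, which matches what we felt in maintenance hours.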

Not every system needs protocol-first architecture. However, if you're building more than a handful of AI agents that need to work together, ignoring these standards means more custom code, a greater maintenance burden, and slower scaling. The companies moving fastest in 2026 will be the ones that adopted these patterns early.

The lesson from the front lines? The hardware race isn't just about chips. It's about who can orchestrate swarms of agents cheaply and reliably on any available hardware footprint.

In custom dev today, survival means adapting fast, from n8n flows to bespoke automations. The goal is to ride this wave instead of getting swept beneath it.

When Hardware Alone Isn't Enough

The Pitfalls of Chasing Raw Compute

We watched a client's IT lead beam over their new racks. Rows of humming servers lighting up a dark data center. Six months later? Their biggest win that quarter came from integrating agentic protocols. Not from running bigger models on those chips.

They realized only after spending hundreds of thousands that compute alone doesn't orchestrate value. It just burns electricity.

This isn't an isolated story. Stanford's 2025 AI Index Report notes that 78% of organizations reported using AI in 2024, up from 55% the year before. Optimism has also risen since 2022 in previously skeptical countries: Germany (+10%), France (+10%), Canada (+8%), Great Britain (+8%), and the US (+4%).

Ignoring Agentic Protocols: A Costly Mistake

The real disruptor in 2025 isn't just cheap hardware. It's agentic protocols like MCP and A2A reshaping how systems work together. Speed alone is no longer the edge. Coordination is the true differentiator now.

This isn't theoretical. Gartner calls Agentic AI the top trend for enterprise transformation this year. Because these protocols unlock autonomy at scale.

Ignoring these advances is a mistake we see too often. In 2026, leaders who chase hardware specs instead of integrating agentic protocols will be left behind. Watching more nimble competitors automate circles around them. While their data centers glow quietly in the dark.

What 2026 Actually Looks Like

We're heading into 2026 with a clearer picture than we've ever had. The winners won't be those with the shiniest servers. They'll be the ones who mastered coordination. Who understand that every watt saved and every process automated through intelligent orchestration is worth more than another teraflop on paper.

The status quo says: "Wait for cheaper chips."

We say: "Use today's efficiency to solve your hardest problem now."

Because here's what most miss. The hardware has already gotten cheap enough. The models are already good enough. The protocols already exist. The only question is whether you're architecting for the reality of 2026 or still building for the fantasy of 2023.

If you’re reading this and wondering where your own stack stands, here’s the question worth asking:

What would change if your AI systems could actually work together? Not through custom code you maintain forever, but through protocols that make coordination automatic.

What problems would solve themselves?

What bottlenecks would vanish?

What possibilities would open up?

If you're curious what that would look like in your environment, let's talk.